Explore How Algorithms Adjust Rankings Over Time

Search engine algorithms are not static systems. They are living frameworks that continuously learn, evaluate, and refine how information is ranked and presented to users. When people search online, they expect accurate, relevant, and trustworthy results. To meet these expectations, algorithms adjust rankings over time based on user behavior, content quality, technical signals, and broader web trends. Understanding this gradual adjustment process helps explain why rankings fluctuate, stabilize, or improve without any single visible update.

The Purpose Behind Ranking Adjustments

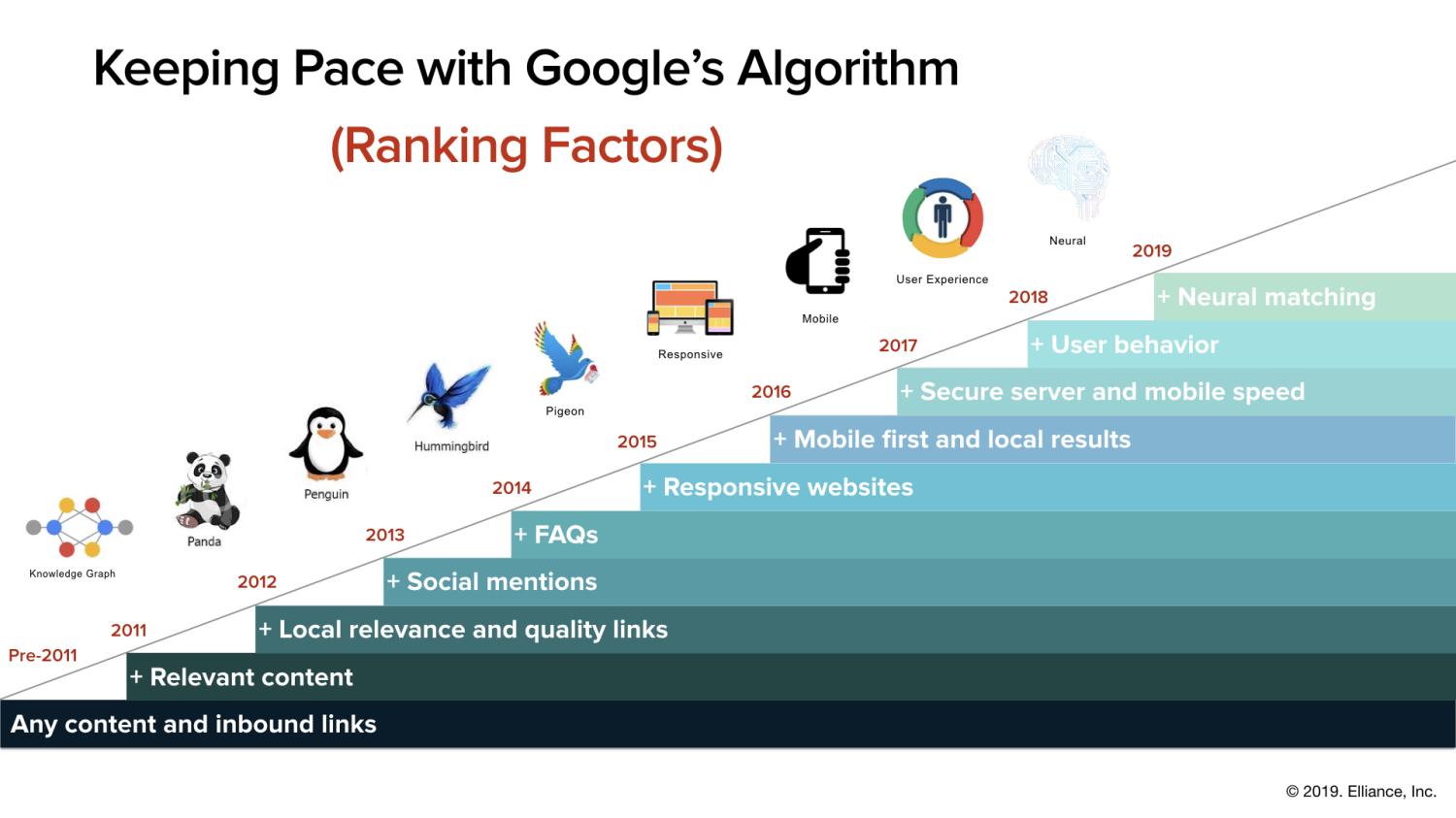

Algorithms exist to serve users first. Each adjustment aims to improve how search engines interpret intent and match it with the most helpful content. Early search systems relied heavily on basic signals like keywords and backlinks. However, as the web grew more complex, those signals became easy to manipulate. As a result, modern algorithms focus on relevance, context, experience, and reliability.

Ranking adjustments are not meant to punish websites randomly. Instead, they reflect how well a page aligns with current quality standards. Over time, pages that remain useful and reliable tend to gain stability, while those that rely on outdated tactics slowly lose visibility.

Continuous Evaluation Rather Than Sudden Change

One common misconception is that rankings only change during major updates. In reality, algorithms evaluate websites continuously. Small changes happen daily as new data is collected. User engagement signals, crawling behavior, and content freshness all contribute to ongoing recalculations.

Because of this, ranking movement often feels gradual rather than abrupt. A page may slowly improve as its relevance becomes clearer, or it may decline if better alternatives appear. This continuous evaluation ensures that results remain accurate as the web evolves.

The Role of Content Relevance Over Time

Content relevance is one of the most stable ranking factors, yet it is not fixed forever. A page that was highly relevant two years ago may become outdated if information changes or user intent shifts. Algorithms reassess relevance by analyzing how well content answers current queries.

As new content is published, algorithms compare it against existing pages. If a newer page provides clearer explanations, better structure, or more complete coverage, rankings may gradually adjust. Therefore, maintaining relevance is an ongoing process, not a one-time effort.

User Behavior as a Long-Term Signal

User behavior plays a significant role in how algorithms adjust rankings. Metrics such as time spent on a page, scrolling behavior, and interaction patterns help search engines understand whether users find content useful. These signals are evaluated over time rather than instantly.

If users consistently engage positively with a page, it sends a strong signal that the content meets expectations. On the other hand, repeated dissatisfaction may lead algorithms to reassess that page’s position. Importantly, these signals are aggregated and anonymized, ensuring that trends matter more than individual actions.
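To make the idea of aggregated, long-term signals concrete, here is a minimal sketch in Python. It is an illustration only, not how any search engine actually works: the function names, the 0.0 to 1.0 satisfaction score, the minimum session count, and the smoothing factor are all assumptions chosen for the example.

```python
from statistics import mean


def aggregate_engagement(session_scores, min_sessions=5):
    """Average anonymous per-session satisfaction scores (0.0 to 1.0).

    Returns None when there are too few sessions to form a trend, so a
    single visit can never move the aggregate on its own.
    (The threshold of 5 is a hypothetical value.)
    """
    if len(session_scores) < min_sessions:
        return None
    return mean(session_scores)


def smoothed_signal(weekly_scores, alpha=0.2):
    """Exponentially smooth weekly aggregates so the signal shifts gradually.

    A small alpha means each new week only nudges the long-term value.
    """
    smoothed = weekly_scores[0]
    for score in weekly_scores[1:]:
        smoothed = alpha * score + (1 - alpha) * smoothed
    return smoothed


# One weak week after two strong ones lowers the signal only partially.
print(smoothed_signal([0.8, 0.8, 0.2]))
```

The design choice worth noting is the smoothing step: because each new observation carries limited weight, one unusual week does not undo a long record of positive engagement, which mirrors the point that trends matter more than individual actions.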

Authority and Trust Development

Authority is not granted overnight. Algorithms assess trust through consistent signals across the web. Mentions, references, and contextual links from relevant sources help establish credibility. However, this trust builds gradually.

When a website consistently demonstrates accuracy, transparency, and expertise, algorithms begin to treat it as a reliable source. Over time, this trust leads to more stable rankings. In contrast, sites that attempt shortcuts may see temporary gains but struggle to maintain them as algorithms refine their evaluations.

Technical Quality and Its Ongoing Impact

Technical performance influences how algorithms interpret a website’s usability. Factors such as page speed, mobile accessibility, and crawl efficiency are measured continuously. Improvements in technical structure may not result in immediate ranking jumps, but they support long-term stability.

Similarly, unresolved technical issues can slowly weaken performance. Algorithms may struggle to crawl or understand content properly, leading to gradual visibility loss. This is why technical health is viewed as a foundation rather than a quick fix.

Algorithm Learning and Data Feedback Loops

Modern algorithms rely heavily on machine learning systems. These systems analyze large volumes of data to identify patterns. As more data becomes available, algorithms refine how they weigh different signals.

This learning process creates feedback loops. When an adjustment improves result quality, it reinforces that pattern. When it does not, the system recalibrates. Over time, these refinements make ranking decisions more precise and resistant to manipulation.
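The feedback loop described above can be sketched as a simple trial-and-keep routine. This is a toy model under stated assumptions, not a real ranking system: the signal names, the hidden `IDEAL` weights, and the `quality` function are hypothetical stand-ins for whatever result-quality measurement a real system would use.

```python
# Hypothetical "best" weights, used only to simulate measured result quality.
IDEAL = {"relevance": 0.6, "links": 0.1}


def quality(weights):
    """Toy quality score: higher (closer to zero) is better."""
    return -sum((weights[name] - IDEAL[name]) ** 2 for name in IDEAL)


def feedback_step(weights, signal, delta, evaluate):
    """Nudge one signal's weight and keep the change only if quality improves."""
    candidate = dict(weights)
    candidate[signal] += delta
    if evaluate(candidate) > evaluate(weights):
        return candidate  # the adjustment helped, so the pattern is reinforced
    return weights  # the adjustment did not help, so it is discarded


def run_feedback_loop(weights, evaluate, step=0.1, rounds=10):
    """Repeat small trial adjustments across all signals for several rounds."""
    for _ in range(rounds):
        for signal in list(weights):
            for delta in (step, -step):
                weights = feedback_step(weights, signal, delta, evaluate)
    return weights


tuned = run_feedback_loop({"relevance": 0.3, "links": 0.4}, quality)
print(tuned)
```

In this sketch the weight on links drifts down and the weight on relevance drifts up over several rounds, with no single large jump. That gradual drift is the behavior the section describes.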

Adaptation to Search Intent Changes

Search intent is not static. The meaning behind a query can evolve due to trends, technology, or cultural shifts. Algorithms adjust rankings to reflect these changes.

For example, a query that once implied basic information may later suggest a need for deeper explanation. Algorithms analyze which types of content users prefer and adjust rankings accordingly. Pages that adapt to these intent changes tend to remain visible longer.

The Impact of Competition on Rankings

Rankings are relative, not absolute. Even if a website improves, competitors may improve faster. Algorithms continuously compare pages against each other within the same topic space.

When competitors publish more comprehensive or clearer content, rankings may shift even if no errors exist on the original page. This competitive reassessment ensures that users see the best available options at any given time.
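A short Python sketch shows why a page can improve and still drop. The page names and scores are invented for illustration, and a single numeric score per page is a deliberate simplification.

```python
def rank_pages(scores):
    """Return page names ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical scores before and after a round of content updates.
before = {"our-page": 0.70, "competitor-a": 0.65, "competitor-b": 0.60}
after = {"our-page": 0.72, "competitor-a": 0.80, "competitor-b": 0.60}

print(rank_pages(before))  # ['our-page', 'competitor-a', 'competitor-b']
print(rank_pages(after))   # ['competitor-a', 'our-page', 'competitor-b']
```

Here "our-page" raised its own score from 0.70 to 0.72 yet moved from first to second, because position depends on the ordering of all scores rather than on any one page's absolute value.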

Why Ethical Practices Lead to Stability

Algorithms are designed to reward consistency and authenticity. Ethical practices align naturally with long-term ranking stability. When content is created with genuine user value in mind, algorithm adjustments often work in its favor.

Manipulative tactics, by contrast, tend to lose effectiveness as algorithms learn to identify them. Rather than causing immediate penalties, these tactics often result in slow declines as signals are discounted. This gradual adjustment protects search quality while encouraging sustainable practices.

The Perspective of SEO Professionals

From the viewpoint of an experienced SEO service provider, ranking changes are best understood as trends rather than events. Short-term fluctuations are normal, while long-term direction reflects alignment with algorithmic priorities.

Successful strategies focus on understanding why adjustments happen instead of reacting emotionally to every change. This perspective helps maintain clarity and prevents unnecessary over-optimization.

Measuring Progress Over Time

Because algorithms adjust rankings gradually, progress should be measured over extended periods. Is traffic becoming more stable? Is engagement improving? Are impressions growing steadily? These indicators provide clearer insights than daily position checks.

By focusing on patterns rather than isolated movements, website owners can better understand how algorithms perceive their content and make informed improvements.
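One way to look at patterns instead of isolated movements is to smooth daily data and then measure its overall direction. The sketch below assumes a plain list of daily average positions (lower is better). The sample numbers and the 7-day window are illustrative choices, not recommendations from any search engine.

```python
def moving_average(values, window=7):
    """Average each run of `window` consecutive values to damp daily noise."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def trend_slope(values):
    """Least-squares slope of values against their index (change per step)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator


# Hypothetical daily average positions over two weeks: noisy day to day.
daily_positions = [12, 9, 14, 10, 11, 8, 12, 9, 10, 7, 11, 8, 9, 6]

smoothed = moving_average(daily_positions)
slope = trend_slope(smoothed)
print("improving" if slope < 0 else "flat or declining")
```

Read day by day, this sample series jumps up and down by several positions. After smoothing, the slope is negative, meaning the average position is moving toward the top of the results over the period.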

Preparing for Future Adjustments

Algorithms will continue to evolve. New signals may be introduced, and existing ones may change in importance. However, the underlying principles remain consistent: help users, provide clarity, and maintain technical reliability.

Websites that focus on these fundamentals are better prepared for future adjustments. Instead of chasing updates, they align with the core purpose of search itself.

Conclusion

Algorithms adjust rankings over time to reflect changing user needs, content quality, and web standards. These adjustments are continuous, data-driven, and focused on long-term relevance rather than short-term reactions. By understanding how and why rankings change, website owners can approach optimization with patience and clarity. Sustainable visibility comes from consistent value, ethical practices, and a deep respect for user experience, which aligns naturally with how modern algorithms are designed to work.

FAQs

Why do rankings change even without making website changes?

Rankings can change because algorithms continuously reevaluate the entire search landscape. Competitors may publish better content, user behavior patterns may shift, or search intent may evolve. Even if a website remains unchanged, its relative position can adjust as new data influences how results are compared and ranked.

How long does it take for algorithm adjustments to reflect improvements?

There is no fixed timeline. Some changes may be reflected within weeks, while others take months. Algorithms need enough data to evaluate whether improvements genuinely benefit users. Consistency and patience are essential, as sudden fluctuations are often part of normal recalibration rather than final outcomes.

Do algorithm updates always cause ranking drops?

No. Updates are designed to improve result quality, not to penalize websites arbitrarily. Some sites gain visibility, others remain stable, and some decline. A drop usually indicates that other pages now better meet user needs or updated quality standards, not that a site has been targeted unfairly.

Can rankings recover naturally over time?

Yes. If a website continues to provide accurate, relevant, and user-friendly content, rankings can stabilize or improve naturally. Algorithms reassess pages continuously, so recovery is possible when quality signals strengthen and align with current search expectations.

How can website owners stay aligned with algorithm changes?

Staying aligned involves focusing on users rather than updates. Clear content structure, reliable information, strong technical foundations, and ethical practices help maintain trust. By monitoring long-term trends instead of reacting to every fluctuation, website owners can adapt smoothly as algorithms evolve.